1-800-ChatGPT is a Phishing Disaster Waiting to Happen

How OpenAI's latest feature might open the door for cybercriminals.

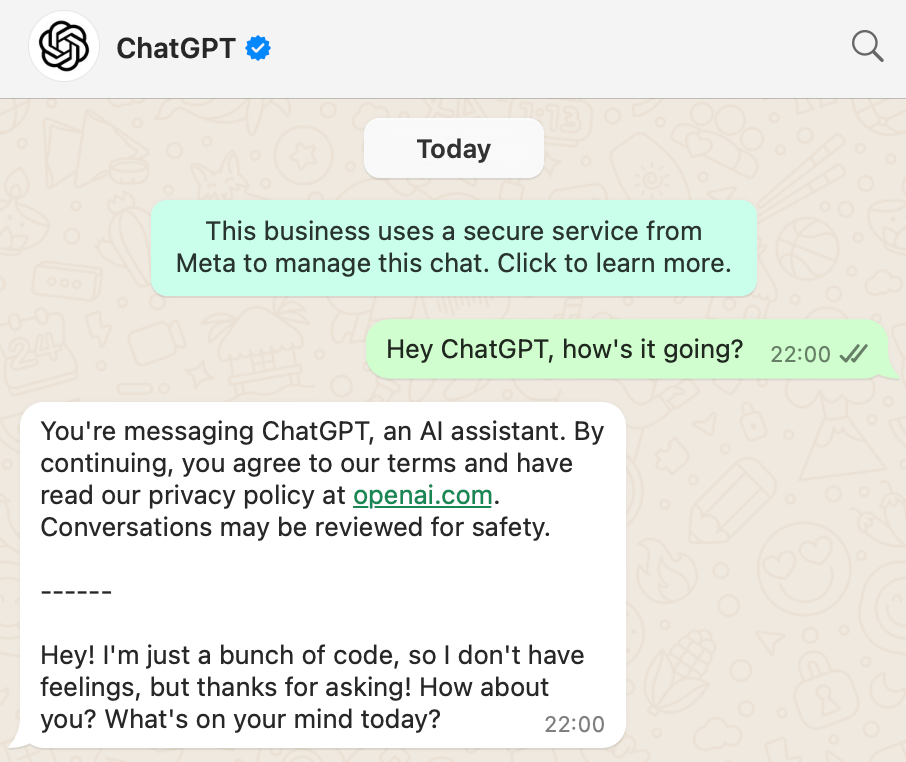

OpenAI recently introduced a feature, 1-800-ChatGPT, that lets users talk to ChatGPT through phone calls and WhatsApp messages. The feature, detailed in OpenAI's official announcement, promises convenience and accessibility, but it also raises significant security concerns, particularly around phishing.

The Potential for Phishing

Cybercriminals routinely exploit human error, and this feature hands them an easy target. By acquiring phone numbers that closely resemble the official 1-800-ChatGPT line, scammers could trick unsuspecting users into giving away sensitive information. Mistyping a phone number is a common slip, and here it could connect users directly to fraudsters posing as ChatGPT or OpenAI representatives.

The Problem with Typosquatting

Typosquatting, a tactic in which attackers register misspelled or mistyped variants of legitimate domains or contact points, is not new. In the context of 1-800-ChatGPT, if a scammer controls a number that is one digit off from the official one, a single misdialed digit is enough to connect a user to a fraudulent service. Once connected, such a service might ask for personal details, financial information, or other sensitive data under the guise of helping with ChatGPT queries.
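To get a sense of how many lookalike numbers exist, the likely typos can simply be enumerated. The sketch below is illustrative only and is not an OpenAI tool. It assumes the official line is the keypad translation of 1-800-CHATGPT (1-800-242-8478), treats the "1-800" prefix as typed correctly, and models two kinds of mistake: one wrong digit, and two neighboring digits swapped. The function names `to_digits` and `typo_variants` are hypothetical.

```python
# Standard telephone keypad mapping of letters to digits.
KEYPAD = {
    letter: digit
    for digit, letters in {
        "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
        "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
    }.items()
    for letter in letters
}


def to_digits(vanity: str) -> str:
    """Translate a vanity number such as '1-800-CHATGPT' into bare digits."""
    out = []
    for ch in vanity.upper():
        if ch.isdigit():
            out.append(ch)
        elif ch in KEYPAD:
            out.append(KEYPAD[ch])
        # Separators such as '-', ' ', '(' and ')' are skipped.
    return "".join(out)


def typo_variants(number: str, fixed_prefix: int = 4) -> list[str]:
    """Return every number exactly one typo away from `number`.

    Two kinds of typo are modelled: one digit replaced by another, and two
    adjacent digits swapped. The first `fixed_prefix` digits (the '1'
    country code and the '800' toll-free code) are assumed to be typed
    correctly, which is a simplification.
    """
    variants = set()
    for i in range(fixed_prefix, len(number)):
        # Substitution: replace digit i with each of the other nine digits.
        for d in "0123456789":
            if d != number[i]:
                variants.add(number[:i] + d + number[i + 1:])
        # Transposition: swap digit i with its right-hand neighbor.
        if i + 1 < len(number) and number[i] != number[i + 1]:
            variants.add(number[:i] + number[i + 1] + number[i] + number[i + 2:])
    return sorted(variants)


official = to_digits("1-800-CHATGPT")  # assumed official line
lookalikes = typo_variants(official)
print(official)         # 18002428478
print(len(lookalikes))  # 69
```

Even under these narrow assumptions there are 69 lookalike numbers (7 editable digits times 9 wrong values, plus 6 adjacent swaps), and every one of them is a number a scammer could try to acquire.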

Who Is Most at Risk?

Certain groups are more likely to fall victim to these scams. Older adults, who may be less comfortable with new technology or more prone to misdialing, are particularly vulnerable. People who do not speak the language fluently may also find it harder to notice that a number is subtly wrong or to recognize the warning signs of a scam. These groups need extra support and clear instructions to avoid falling prey to such attacks.

What OpenAI Should Have Done

While the feature itself is innovative, OpenAI could have taken proactive measures to mitigate these risks. For example:

- Purchasing phone numbers with common typos and redirecting them to the official line.

- Running public awareness campaigns to educate users about potential scams.

- Providing a verification system within WhatsApp to confirm the authenticity of the ChatGPT account.

How Users Can Protect Themselves

Until OpenAI addresses these risks, users must remain cautious. Here are some tips to stay safe:

- Double-check the phone number before dialing or messaging.

- Never share sensitive information unless you are certain of the recipient's identity.

- Scan the official QR code from the OpenAI website to ensure you are connecting with the legitimate ChatGPT chatbot.

- Report any suspicious activity to OpenAI immediately.
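The "double-check the number" tip is also easy to automate, for example in a call-screening or contact-management tool. The sketch below is hypothetical: `OFFICIAL`, `normalize`, `is_near_miss`, and `check_number` are illustrative names, and it assumes the official number is 1-800-242-8478, the keypad translation of 1-800-CHATGPT. Any number exactly one wrong or swapped digit away from it is flagged as a lookalike.

```python
import re

# Assumption: keypad translation of 1-800-CHATGPT, as bare digits.
OFFICIAL = "18002428478"


def normalize(raw: str) -> str:
    """Strip formatting and add the North American '1' prefix if missing."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 10:
        digits = "1" + digits
    return digits


def is_near_miss(a: str, b: str) -> bool:
    """True if a and b differ by one wrong digit or one adjacent swap."""
    if len(a) != len(b) or a == b:
        return False
    diffs = [i for i in range(len(a)) if a[i] != b[i]]
    if len(diffs) == 1:
        return True  # single substituted digit
    if len(diffs) == 2 and diffs[1] == diffs[0] + 1:
        i, j = diffs
        return a[i] == b[j] and a[j] == b[i]  # neighbors swapped
    return False


def check_number(raw: str) -> str:
    """Classify a dialed number as 'official', 'lookalike', or 'unknown'."""
    number = normalize(raw)
    if number == OFFICIAL:
        return "official"
    if is_near_miss(number, OFFICIAL):
        return "lookalike"
    return "unknown"


for raw in ["+1 (800) 242-8478", "800-242-8487", "1-555-0100"]:
    print(raw, "->", check_number(raw))
# +1 (800) 242-8478 -> official
# 800-242-8487 -> lookalike
# 1-555-0100 -> unknown
```

A "lookalike" result does not prove the number belongs to a scammer, but it is a strong signal not to share personal or financial details with it.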

Conclusion

The introduction of 1-800-ChatGPT marks a significant step toward making AI accessible to the masses. However, with great innovation comes great responsibility. By mitigating the phishing threats that come with a phone- and WhatsApp-based service, OpenAI can ensure that its technology empowers users without exposing them to unnecessary risk. Until then, users must exercise caution and stay alert to the potential for phishing scams.