The End of Pre-Training as We Know It: What’s Next for AI?

The Plateau of LLM Pre-Training: Why the Future of AI Lies Elsewhere

We've all heard about the explosive growth of AI, especially with large language models (LLMs). But according to Ilya Sutskever, a co-founder of OpenAI, the days of scaling LLMs through pre-training alone are numbered. In his NeurIPS 2024 talk revisiting the paper "Sequence to Sequence Learning with Neural Networks," Sutskever highlighted an uncomfortable truth: pre-training as we know it will end.

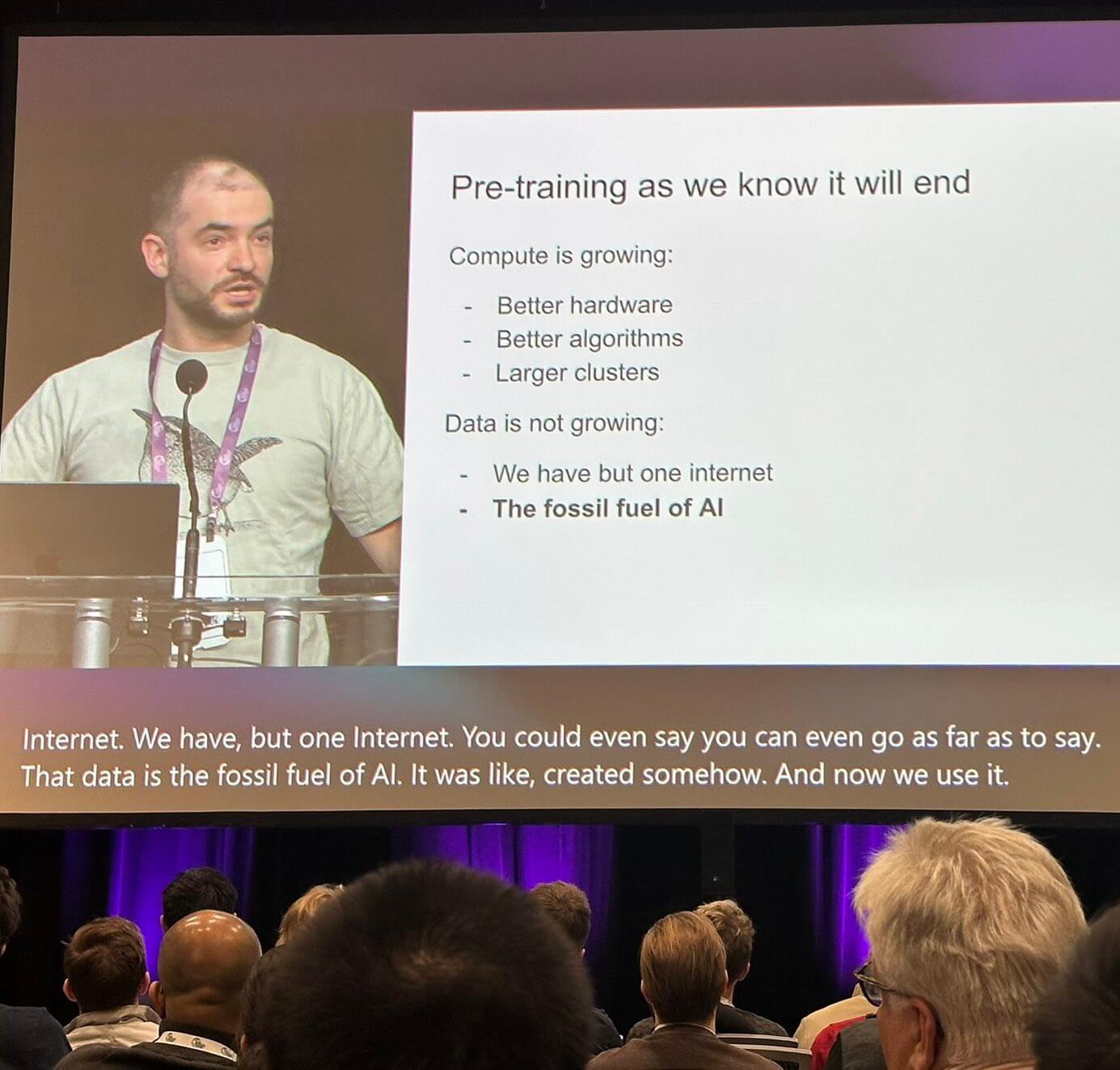

The Plateau of Pre-Training

Pre-training, once the holy grail of AI development, has reached a plateau. Compute continues to grow thanks to better hardware, better algorithms, and larger clusters, but data is no longer growing at the same rate. As Sutskever put it, "We have but one internet. Data is the fossil fuel of AI." The internet is finite, and so are the vast data reservoirs we've relied on to fuel AI progress.

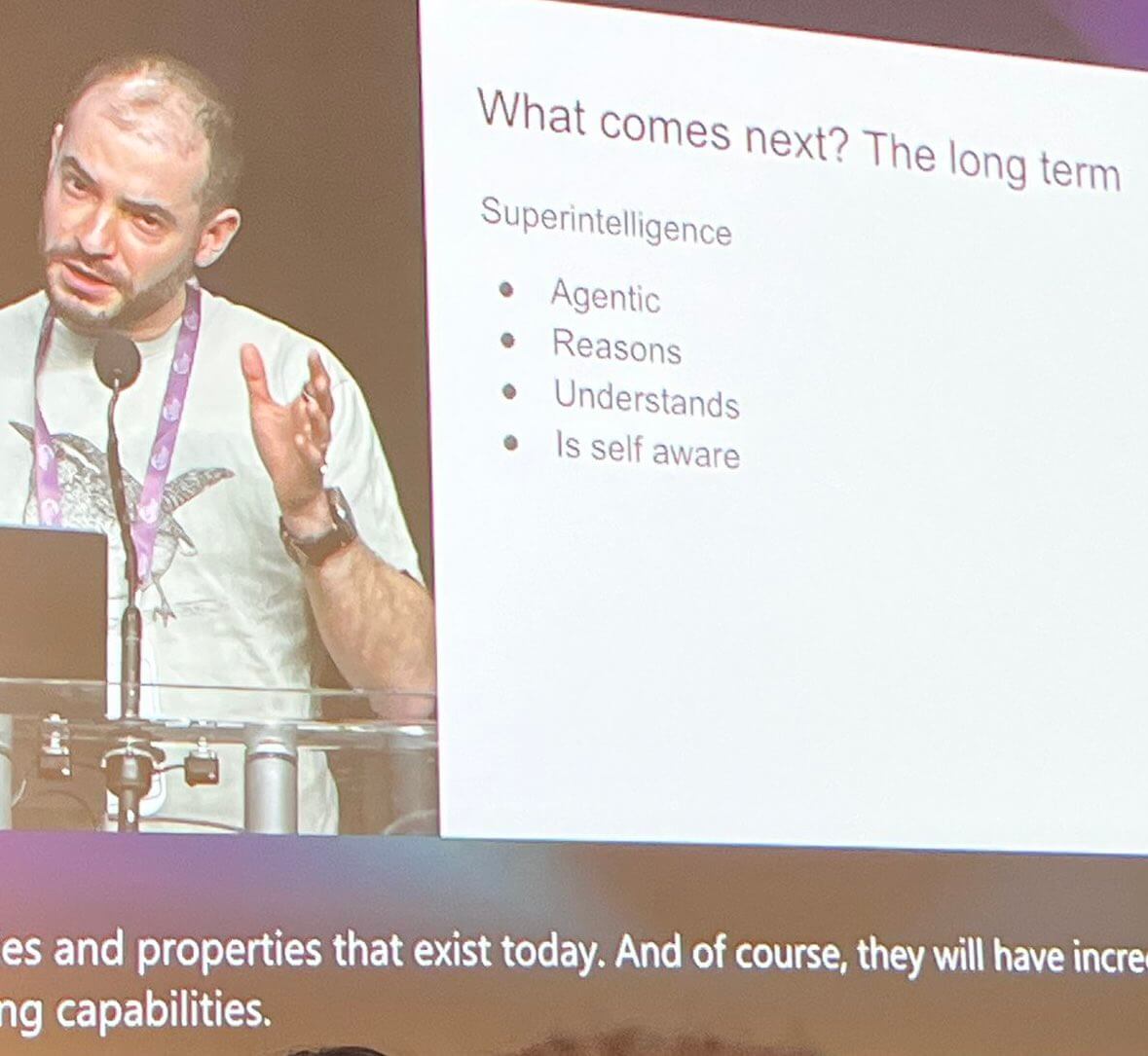

What Comes Next? The Long Term

The future of AI is heading toward a new frontier centered on superintelligent agents. These will not be typical AI models that process data and return results. In the long term, AI systems are expected to become autonomous, reasoning entities that understand the world around them and even have a sense of self. Here's a glimpse of what that future might look like:

Agentic: More Than Just a Tool

When we say AI will be "agentic," we mean it will be able to act on its own, without needing constant human direction. These superintelligent agents won’t just perform tasks. They’ll be able to make decisions, set their own goals, and take actions to achieve them. Imagine an AI that can navigate the world independently, deciding what needs to be done and how to do it, all based on its own reasoning. It’s a level of autonomy that goes far beyond the AI systems we use today.

Reasoning: Thinking Beyond the Surface

Reasoning is one of the core abilities that will set superintelligent agents apart from anything we have now. While today's AI can analyze data and follow patterns, a superintelligent agent will be able to think critically, solve complex problems, and even predict future outcomes. It will understand not just the "what" but the "why" behind decisions. Whether it’s navigating an unfamiliar environment or solving an abstract problem, these agents will reason in ways that mimic, and in some cases surpass, human thought processes.

Understanding: A Deeper Comprehension

Understanding is another game-changer. Superintelligent agents will not just process information—they will truly understand it. Right now, AI can recognize patterns and execute tasks based on data, but it doesn’t really "get" what it’s doing. In the future, these agents will be able to comprehend context, make connections, and grasp the deeper meaning of the world around them. This understanding will allow them to make informed decisions, solve problems with nuance, and interact with humans in a way that feels natural and intuitive.

Self-Awareness: The Next Step in Consciousness

Perhaps the most mind-boggling concept is the idea of AI becoming self-aware. A superintelligent agent that is self-aware will have an understanding of its own existence and its place in the world. It will know its capabilities, limitations, and goals. This level of awareness opens up new possibilities for AI to not only make decisions based on logic but also consider the ethical implications of its actions. Self-awareness will allow these agents to evaluate their own behavior, learn from mistakes, and adapt over time in ways that go beyond simple programming.

The Path Forward

To push beyond the limits of pre-training, AI researchers will need to rethink the way models are developed. It’s no longer enough to simply scale up data and compute. The next frontier lies in developing more efficient algorithms and finding new sources of data beyond what the internet already provides.